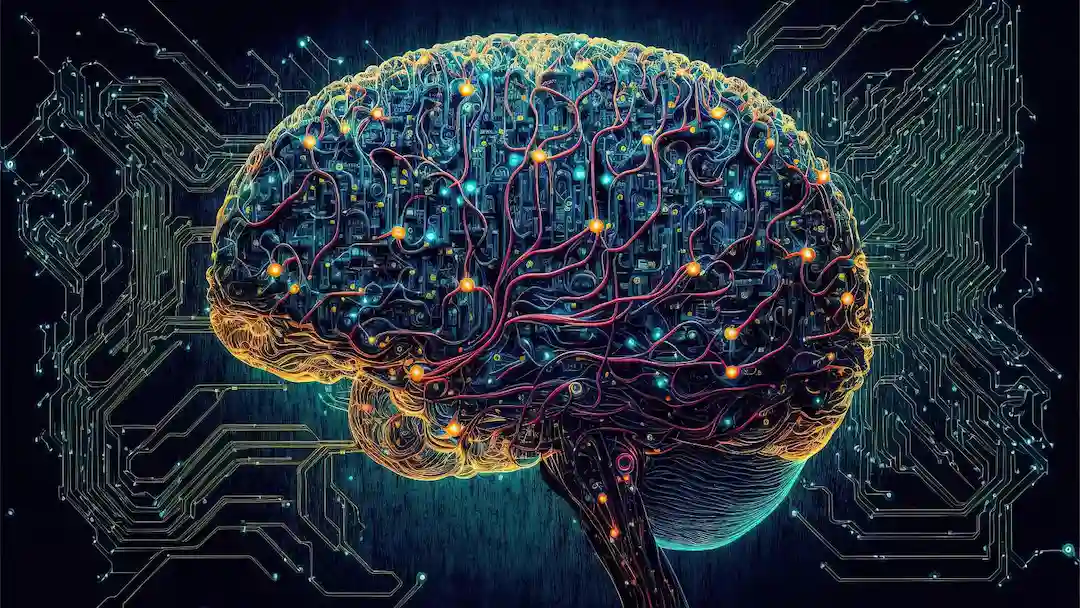

The realm of deep learning, a formidable branch of artificial intelligence, has ushered in groundbreaking advancements across diverse domains such as computer vision, natural language processing, and robotics. However, sculpting and training high-performing deep neural networks (DNNs) is akin to the art of taming a wild beast. In this pursuit, indispensable tools such as hyperparameter tuning, regularization, and optimization techniques act as guiding forces, steering the network toward precision and resilience.

Hyperparameter Tuning: Orchestrating the Symphony of Learning

Conceptualize DNNs as intricate machines equipped with myriad dials and levers. These levers, referred to as hyperparameters, are set before training begins rather than learned from the data, and they control how the network learns and performs. Examples include the learning rate, which sets the size of each weight update; the number of layers and of neurons per layer in the network architecture; and the choice of activation functions, which inject non-linearity into the system. Identifying good values for these hyperparameters is pivotal, as poor configurations may lead to sluggish learning, inadequate generalization, or training that diverges altogether.

The process of hyperparameter tuning entails systematically exploring various combinations of values and assessing the corresponding network performance. Techniques like grid search, random search, and Bayesian optimization automate this exploration, facilitating efficient navigation of the hyperparameter space. For instance, when tuning the learning rate, the exploration may involve experimenting with values such as 0.01, 0.001, and 0.0001, ultimately selecting the one minimizing the model’s error on a validation dataset.
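The learning-rate search described above can be sketched in a few lines. This is only an illustration under stated assumptions: the synthetic dataset, the small logistic model standing in for a DNN, and the helper names `make_data`, `train`, and `log_loss` are all hypothetical and not part of any particular library. Only the three candidate learning rates come from the text.

```python
import numpy as np

def make_data(n, rng):
    """Synthetic binary classification data (hypothetical stand-in for a real dataset)."""
    X = rng.normal(size=(n, 5))
    true_w = np.array([1.5, -2.0, 0.5, 0.0, 1.0])
    y = (X @ true_w + 0.3 * rng.normal(size=n) > 0).astype(float)
    return X, y

def log_loss(w, X, y):
    """Mean binary cross-entropy of a logistic model with weights w."""
    p = 1.0 / (1.0 + np.exp(-(X @ w)))
    eps = 1e-12  # guards against log(0)
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))

def train(X, y, learning_rate, steps=500):
    """Full-batch gradient descent on the logistic loss, starting from zero weights."""
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))
        grad = X.T @ (p - y) / len(y)
        w -= learning_rate * grad
    return w

rng = np.random.default_rng(0)
X_train, y_train = make_data(400, rng)
X_val, y_val = make_data(200, rng)  # held-out data used only for model selection

# Grid search: train once per candidate and score each model on the validation set.
candidates = [0.01, 0.001, 0.0001]
val_losses = {lr: log_loss(train(X_train, y_train, lr), X_val, y_val)
              for lr in candidates}
best_lr = min(val_losses, key=val_losses.get)

for lr, value in val_losses.items():
    print(f"learning rate {lr}: validation loss {value:.4f}")
print("selected learning rate:", best_lr)
```

Random search and Bayesian optimization follow the same train-then-validate loop; they differ only in how the next candidate is chosen.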

Regularization: Subduing the Overfitting Menace

DNNs often grapple with overfitting, a phenomenon where they memorize training data excessively, hindering their ability to generalize to new instances. Regularization techniques come to the rescue by penalizing the network for harboring complex structures or large weights. This discourages the network from overly tailoring itself to specific training data points, fostering the acquisition of broader patterns and enhancing performance on unseen data.

A prevalent regularization technique is L2 regularization, which adds a penalty term to the cost function proportional to the sum of squared weights in the network. This discourages the proliferation of excessively large weights, promoting simpler and more generalizable models. Another technique, dropout, randomly deactivates a fraction of neurons on each training step (all neurons remain active at inference time), so the network cannot lean on any single neuron and is less prone to overfitting to specific data features.
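Both techniques are small enough to write out directly. The following is a minimal NumPy sketch, not a reference implementation: the function names and the penalty strength `lam` are hypothetical, and the dropout shown is the "inverted" variant, which rescales the surviving activations during training so that nothing needs to change at inference. That variant is an assumption made here, not something the text above specifies.

```python
import numpy as np

def l2_penalty(weights, lam):
    """L2 term added to the cost: lam times the sum of squared weights."""
    return lam * sum(np.sum(W ** 2) for W in weights)

def l2_penalty_grad(W, lam):
    """Gradient of the L2 term with respect to one weight matrix."""
    return 2.0 * lam * W

def dropout(activations, drop_prob, rng, training=True):
    """Inverted dropout: zero each unit with probability drop_prob, rescale the rest."""
    if not training or drop_prob == 0.0:
        return activations  # at inference every unit stays active
    keep_prob = 1.0 - drop_prob
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob

rng = np.random.default_rng(0)

# L2: the penalty grows with the squared magnitude of the weights.
W1 = np.array([[1.0, -2.0], [0.5, 0.0]])
penalty = l2_penalty([W1], lam=0.01)

# Dropout: about half of the units are zeroed, the survivors are doubled.
h = np.ones((1000, 50))
h_train = dropout(h, drop_prob=0.5, rng=rng, training=True)
h_eval = dropout(h, drop_prob=0.5, rng=rng, training=False)
print("L2 penalty:", penalty)
print("mean activation with dropout:", h_train.mean())
```

Because the kept units are scaled by `1 / keep_prob`, the expected activation is the same in training and inference, which is why the evaluation path can simply return its input.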

Optimization: Navigating the Learning Odyssey

The training of a DNN involves the continual adjustment of its weights based on errors encountered during the training process. Optimization algorithms steer this weight update process, determining the direction and magnitude of changes. The selection of an appropriate optimizer is pivotal for ensuring efficient and effective learning.

Widely used optimizers include gradient descent and its variants, such as stochastic and mini-batch gradient descent, which step in the direction of steepest descent (the negative gradient) of the error surface. Other optimizers, such as Adam and RMSprop, adapt the effective step size separately for each parameter, which often speeds convergence and reduces sensitivity to the chosen learning rate. The best choice of optimizer hinges on factors such as network size and complexity, data characteristics, and desired learning behavior.
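To make the difference concrete, here is a hedged sketch of the two update rules on a toy quadratic loss whose two coordinates have very different curvature. The loss function, starting point, and function names are illustrative assumptions; the Adam hyperparameters `beta1=0.9`, `beta2=0.999`, and `eps=1e-8` are the commonly used defaults.

```python
import numpy as np

def loss(w):
    """Toy loss f(w) = 0.5 * (100 * w[0]**2 + w[1]**2); steep in w[0], shallow in w[1]."""
    return 0.5 * (100.0 * w[0] ** 2 + w[1] ** 2)

def grad(w):
    """Analytic gradient of the toy loss."""
    return np.array([100.0 * w[0], w[1]])

def gradient_descent(w0, lr, steps):
    """Plain gradient descent: one global learning rate for every parameter."""
    w = w0.copy()
    for _ in range(steps):
        w -= lr * grad(w)
    return w

def adam(w0, lr, steps, beta1=0.9, beta2=0.999, eps=1e-8):
    """Adam: per-parameter steps scaled by running estimates of gradient moments."""
    w = w0.copy()
    m = np.zeros_like(w)  # running mean of gradients
    v = np.zeros_like(w)  # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g ** 2
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (np.sqrt(v_hat) + eps)
    return w

w0 = np.array([1.0, 1.0])
gd_first = gradient_descent(w0, lr=0.01, steps=1)
adam_first = adam(w0, lr=0.01, steps=1)
w_gd = gradient_descent(w0, lr=0.01, steps=200)
w_adam = adam(w0, lr=0.01, steps=200)

print("first step, gradient descent:", gd_first)
print("first step, Adam:            ", adam_first)
print("loss after 200 steps:", loss(w_gd), "(GD)", loss(w_adam), "(Adam)")
```

On the first step, gradient descent moves each coordinate in proportion to its gradient, so the steep coordinate moves 100 times further than the shallow one, while Adam moves both by roughly the learning rate. Which behavior works better depends on the problem, so the final losses here should not be read as a general ranking.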

Synthesis: Illustrating the Journey with an Example

Consider constructing a deep neural network tasked with classifying handwritten digits. The journey commences by defining the network architecture and selecting hyperparameters like the number of layers and neurons. Subsequently, regularization techniques such as L2 are employed to counter overfitting. During training, an optimizer like Adam updates the network’s weights based on the gradient of the training error. Hyperparameter tuning involves methodically exploring diverse combinations of learning rates, activation functions, and other settings, evaluating the network’s performance on a validation dataset to pinpoint the best configuration.

By judiciously applying hyperparameter tuning, regularization, and optimization techniques, the once unruly DNN transforms into a disciplined and accurate machine, poised to tackle the challenges of real-world problems. It’s an iterative process, demanding experimentation and refinement to achieve optimal outcomes. Unleash your inner tamer, seize the tuning knobs, and embark on the enthralling journey of deep learning mastery!
